Scientists from South Korea’s Gwangju Institute of Science and Technology (Gist) say they have developed strategies to help passengers feel safer in self-driving vehicles.

Automated vehicles (AVs) may be part of the future urban mobility mix, but passenger trust remains a challenge. Providing timely, passenger-specific explanations for the decisions AVs make could help bridge this gap, so Gist’s researchers have investigated ways to increase passengers’ sense of safety and confidence during AV trips.

Their TimelyTale is a novel in-vehicle multimodal dataset designed to capture real-world driving scenarios and passengers’ explanation needs. The goal, the researchers say, is to see it used in all AVs.

Fostering trust

Driverless cars allow people to engage in non-driving-related tasks such as relaxing, working or watching multimedia en route. However, widespread adoption is hindered by passengers’ limited trust. Understanding why an AV reacts in a certain way can foster trust by giving passengers a sense of control and reducing negative experiences. To be effective, though, explanations must be informative, understandable and concise.

Existing explainable artificial intelligence (XAI) approaches cater mainly to developers and focus on high-risk scenarios or complex explanations, neither of which may suit passengers. Instead, passenger-centric XAI models need to understand what type of information passengers need, and when, in real-world driving scenarios, said Professor SeungJun Kim, director of the Human-Centered Intelligent Systems Lab at Gist.

TimelyTale was created to include passenger-specific sensor data for context-relevant explanations that help people feel more confident about their trip. “Our research shifts the focus of XAI in autonomous driving from developers to passengers,” said Kim. “We have developed an approach for gathering passengers’ actual demand for in-vehicle explanations and methods to generate timely, situation-relevant explanations for passengers.”

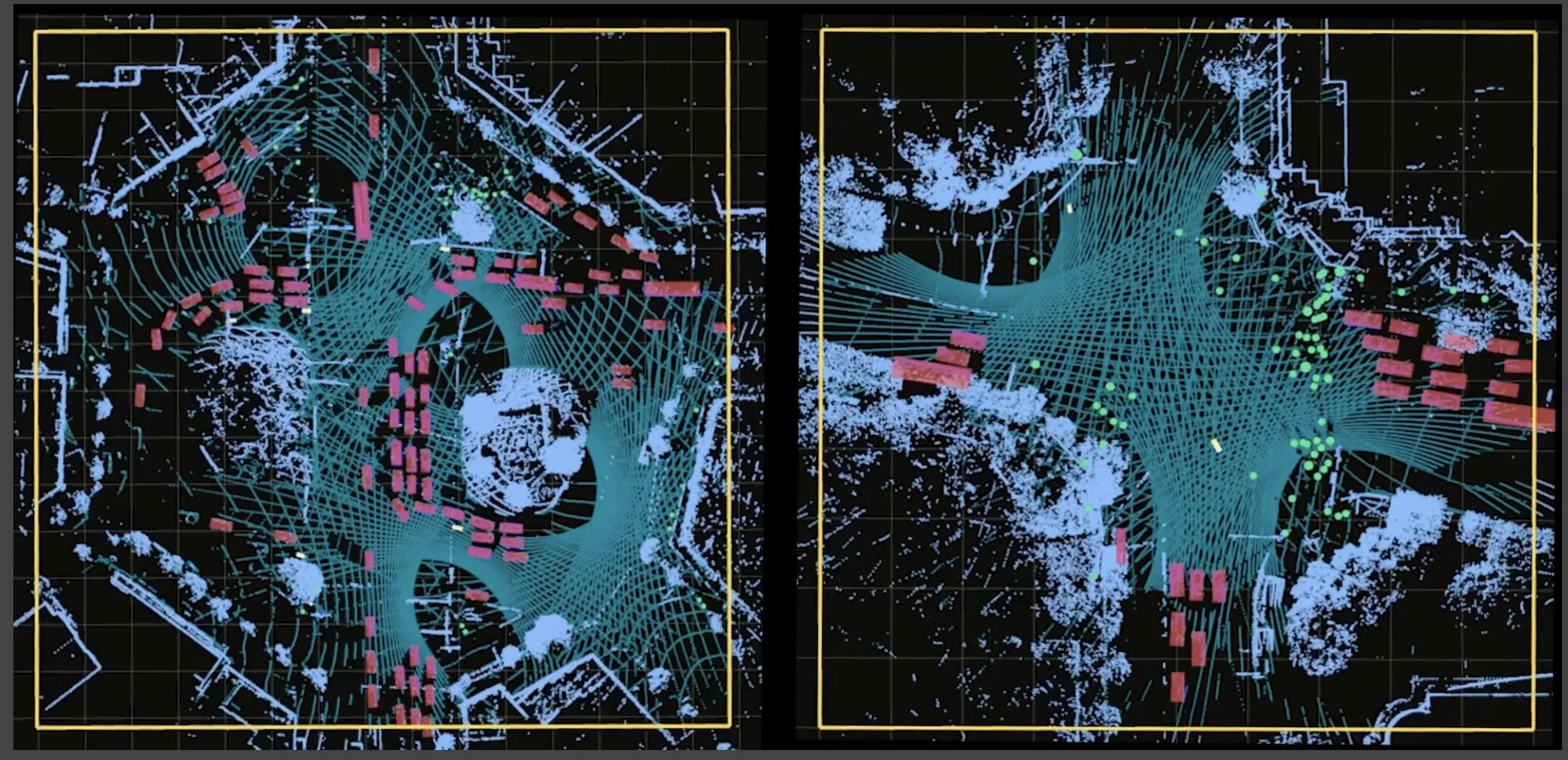

Using augmented reality, the researchers first studied how different types of visual explanation (perception, attention, or a combination of the two), and the timing of those explanations, affected passenger experience under real driving conditions.

They found that showing the vehicle’s perception state alone improved trust, perceived safety and situational awareness without overwhelming passengers. They also discovered that traffic risk probability was the most effective cue for deciding when to deliver explanations, especially when passengers felt overloaded with information.

Telling a TimelyTale

Building on these findings, the researchers developed the TimelyTale dataset. It includes three types of data: exteroceptive (about the external environment, such as sights and sounds), proprioceptive (about the body’s positions and movements) and interoceptive (about the body’s internal sensations, such as pain).

The data was gathered from passengers using a variety of sensors in naturalistic driving scenarios and serves as key features for predicting their demand for explanations. Notably, the work also incorporates the concept of interruptibility, which refers to the shift in passenger focus from so-called ‘non-driving-related’ tasks to ‘driving-related’ information. The method effectively identified both the timing and frequency of passengers’ demands for explanations, as well as the specific explanations passengers want in different driving situations.

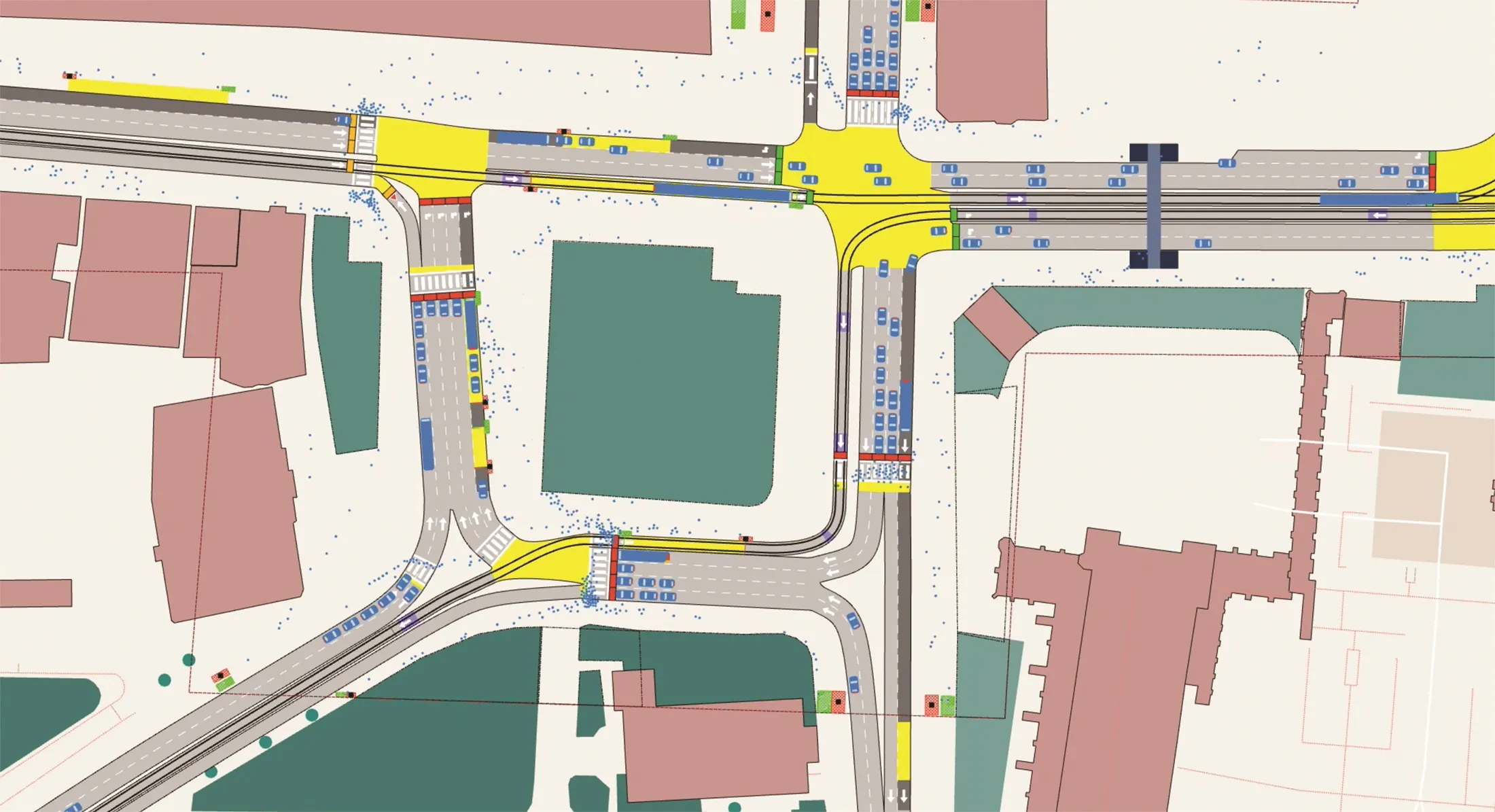

Using this approach, the researchers developed a machine-learning model that predicts the best time to provide explanations. Additionally, as a proof of concept, they conducted city-wide modelling to generate textual explanations tailored to different driving locations.

"Our research lays the groundwork for increased acceptance and adoption of AVs, potentially reshaping urban transportation and personal mobility in the coming years," concludes Kim.